Quantum theory and classical probability theory are special cases of general probabilistic theories (GPTs), i.e. conceivable physical theories that describe correlations of detector clicks. They are studied to improve our understanding of quantum theory -- for example, by reconstructing it -- and to explore alternative models of physics or computation. Our group has contributed important insights into the structure of GPTs and their relation to physics.

What is a GPT?

GPTs are rigorous mathematical theories that generalize both classical and quantum physics (in particular, their statistical predictions). The GPT framework is based on absolutely minimal assumptions: essentially, it contains only structural elements that represent self-evident features of general laboratory situations. It admits a large class of theories, with quantum theory (QT) as just one possible theory among many others.

A thorough, mathematically rigorous, yet pedagogical introduction to GPTs can be found in my “Les Houches lecture notes” [2]; other introductions (though with slightly different formalism) can be found in Refs. [16, 27], for example. Here, I will only give a very sketchy and incomplete overview.

The paradigmatic laboratory situation considered in the GPT framework is the one sketched in the figure below: the preparation of a physical system is followed by a transformation and, finally, by a measurement that yields one of several possible outcomes with some well-defined probability.

The results of the preparation procedure are described by states, and the set of all possible states in which a given system can be prepared is its state space. Every possible state space defines a GPT system, subject to a single constraint: we want to be able to toss a coin and prepare one of two given states at random, with a certain probability. This introduces a notion of affine-linear combinations on the state space, which, together with a notion of normalization, implies that state spaces are convex subsets of some real vector space.
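The coin-toss requirement can be checked concretely in the quantum case, where states are density matrices. The following sketch is our own illustration (the helper names `is_density_matrix` and `mix` are hypothetical): it mixes two pure qubit states with probability p and verifies that every such mixture is again a valid state, which is exactly the convexity of the state space.

```python
import numpy as np

def is_density_matrix(rho: np.ndarray, tol: float = 1e-12) -> bool:
    """Check the defining properties of a quantum state: Hermitian, unit trace, positive semidefinite."""
    hermitian = np.allclose(rho, rho.conj().T, atol=tol)
    unit_trace = abs(np.trace(rho) - 1) < tol
    # eigvalsh assumes a Hermitian input, so it is only evaluated if the Hermiticity check passed
    psd = hermitian and np.linalg.eigvalsh(rho).min() > -tol
    return bool(hermitian and unit_trace and psd)

def mix(p: float, rho1: np.ndarray, rho2: np.ndarray) -> np.ndarray:
    """State obtained by preparing rho1 with probability p and rho2 with probability 1 - p."""
    return p * rho1 + (1 - p) * rho2

# Two pure qubit states: |0><0| and |+><+|
ket0 = np.array([1, 0], dtype=complex)
ketp = np.array([1, 1], dtype=complex) / np.sqrt(2)
rho0 = np.outer(ket0, ket0.conj())
rhop = np.outer(ketp, ketp.conj())

for p in (0.0, 0.25, 0.5, 1.0):
    rho = mix(p, rho0, rhop)
    # Every mixture passes the check; intermediate p give mixed (non-pure) states
    print(p, is_density_matrix(rho), np.round(np.linalg.eigvalsh(rho), 3))
```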

Transformations map states to states, and they must be consistent with the preparation of statistical mixtures, i.e. they must be linear maps. Outcome probabilities are described by linear functionals ("effects") on the space of states. And this is essentially all that is assumed.
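To make "states as vectors, effects as linear functionals, transformations as linear maps" concrete outside of QT, here is a minimal sketch using a toy system chosen for illustration: the "square bit" (gbit), whose normalized states are vectors (1, x, y) with |x|, |y| ≤ 1. The effect `e_x_plus`, the 90° rotation `rot90`, and the deliberately nonlinear map `squash` are hypothetical examples. The code checks that outcome probabilities are affine in the state and that a linear map commutes with mixing, while a nonlinear map does not.

```python
import numpy as np

# Toy GPT system (the "square bit"): states are omega = (1, x, y) with |x| <= 1, |y| <= 1;
# the first entry encodes normalization.
def gbit_state(x: float, y: float) -> np.ndarray:
    assert abs(x) <= 1 and abs(y) <= 1, "not in the square state space"
    return np.array([1.0, x, y])

# An effect is a linear functional; this one is "outcome + of the x-measurement".
e_x_plus = 0.5 * np.array([1.0, 1.0, 0.0])

def prob(effect: np.ndarray, state: np.ndarray) -> float:
    return float(effect @ state)

# A reversible transformation: rotation of the square by 90 degrees, (x, y) -> (-y, x).
rot90 = np.array([[1.0, 0.0, 0.0],
                  [0.0, 0.0, -1.0],
                  [0.0, 1.0, 0.0]])

# A made-up nonlinear map, used only to show what the linearity requirement rules out.
def squash(state: np.ndarray) -> np.ndarray:
    return np.array([1.0, state[1] ** 2, state[2] ** 2])

w1, w2, p = gbit_state(1, 1), gbit_state(-1, 1), 0.5
mixture = p * w1 + (1 - p) * w2

# Probabilities are affine: p(e | mixture) = p * p(e | w1) + (1 - p) * p(e | w2)
print(prob(e_x_plus, mixture), p * prob(e_x_plus, w1) + (1 - p) * prob(e_x_plus, w2))
# The linear map commutes with mixing ...
print(np.allclose(rot90 @ mixture, p * rot90 @ w1 + (1 - p) * rot90 @ w2))
# ... but the nonlinear map does not
print(np.allclose(squash(mixture), p * squash(w1) + (1 - p) * squash(w2)))
```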

Two special cases are of particular importance:

- Quantum theory (QT). Systems are characterized by an integer n (the maximal number of perfectly distinguishable states), and the states are the (n × n) density matrices. The transformations are the completely positive, trace-preserving maps, and the effects are given by positive semidefinite operators with eigenvalues between 0 and 1 (POVM elements). Among the transformations, the reversible ones (those that can be undone) are the unitary maps ρ ↦ UρU†.

- Classical probability theory (CPT). For given n, the states are the n-outcome probability vectors. The transformations are the channels, i.e. stochastic matrices, and the effects are given by vectors with entries between 0 and 1. The reversible transformations are the permutations of the n configurations.
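For the classical case, the characterization of reversible transformations can be checked numerically. The sketch below uses hypothetical helper names and assumes the column-stochastic convention (states are column vectors). It tests whether a stochastic matrix has a stochastic inverse: a permutation passes, while the inverse of a noisy channel has negative entries and is therefore not a physical transformation.

```python
import numpy as np

def is_prob_vector(p: np.ndarray, tol: float = 1e-12) -> bool:
    return bool(np.all(p >= -tol) and abs(p.sum() - 1) < tol)

def is_stochastic(M: np.ndarray, tol: float = 1e-12) -> bool:
    # Column-stochastic: non-negative entries, every column sums to 1
    return bool(np.all(M >= -tol) and np.allclose(M.sum(axis=0), 1))

def is_reversible_in_cpt(M: np.ndarray) -> bool:
    """Reversible within CPT: M is invertible and its inverse is again a channel."""
    if abs(np.linalg.det(M)) < 1e-12:
        return False
    return is_stochastic(M) and is_stochastic(np.linalg.inv(M))

noisy = np.array([[0.9, 0.2],
                  [0.1, 0.8]])   # a classical channel with bit-flip noise
swap = np.array([[0.0, 1.0],
                 [1.0, 0.0]])    # permutation of the two configurations

p = np.array([0.7, 0.3])
print(is_prob_vector(noisy @ p), is_prob_vector(swap @ p))
print(is_reversible_in_cpt(noisy), is_reversible_in_cpt(swap))
print(np.round(np.linalg.inv(noisy), 3))  # negative entries: the inverse is not a channel

# An effect assigns an outcome probability to every state; its entries lie in [0, 1]
effect = np.array([1.0, 0.25])
print(float(effect @ p))
```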

In addition to QT and CPT, there is a continuum of GPTs with different kinds of physical properties. For example, a GPT called "boxworld" contains states that violate Bell inequalities by more than any quantum state [16]. Other GPTs predict interference of "higher order" than QT [30], a prediction that can in principle be tested experimentally [31]. Note that typical GPTs do not carry any kind of algebraic structure: there is in general no notion of "multiplication of observables".
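Higher-order interference is usually quantified by inclusion-exclusion terms in the style of Sorkin: I2 combines two-slit and one-slit probabilities, I3 combines three-, two- and one-slit probabilities. The quick numerical sketch below assumes random, hypothetical amplitudes at a single detector position and the Born rule. It shows why QT has no third-order interference: probabilities are quadratic in the amplitudes, so I3 cancels identically while I2 generally does not. It illustrates only the QT side, not the GPT models of Ref. [30].

```python
import itertools
import numpy as np

def p_open(amplitudes, slits):
    """Detection probability with only the given slits open (Born rule: |sum of amplitudes|^2)."""
    return abs(sum(amplitudes[i] for i in slits)) ** 2

def interference_term(amplitudes, slits):
    """Inclusion-exclusion term over all subsets of the open slits (empty set gives probability 0)."""
    k = len(slits)
    total = 0.0
    for r in range(k + 1):
        for subset in itertools.combinations(slits, r):
            total += (-1) ** (k - r) * p_open(amplitudes, subset)
    return total

rng = np.random.default_rng(seed=1)
# Hypothetical complex amplitudes at one point on the screen, one per slit
a = rng.normal(size=3) + 1j * rng.normal(size=3)

print("I2 (two slits):  ", interference_term(a, (0, 1)))     # generally nonzero
print("I3 (three slits):", interference_term(a, (0, 1, 2)))  # zero up to rounding in QT
```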

The "landscape" of GPTs provides a simple and extremely general framework in which QT can be situated. GPTs have a number of properties in common with QT:

- They satisfy a no-cloning theorem (yes, even classical probability theory does!).
- Most GPTs have non-unique decompositions of mixed states into pure states.
- They have a notion of post-measurement states.
- Many of them allow for a notion of entanglement similar to QT.
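Linearity is what forbids cloning even in classical probability theory. In the sketch below (our own illustration), the only linear map that copies the two deterministic states of a bit is completely fixed by its action on them. Applied to a fair coin, it produces a perfectly correlated pair instead of two independent fair coins, so it does not clone all states.

```python
import numpy as np

def clone(p: np.ndarray) -> np.ndarray:
    """The desired (nonlinear) cloning map p -> p (x) p, i.e. two independent copies."""
    return np.kron(p, p)

# A linear map is determined by its action on a basis: column j of C must be the
# clone of the j-th deterministic state, so C is the unique linear candidate.
delta = np.eye(2)
C = np.column_stack([clone(delta[:, j]) for j in range(2)])

p = np.array([0.5, 0.5])  # a fair coin
print("linear map gives:", C @ p)      # correlated pair: probabilities 0.5, 0, 0, 0.5
print("true clone is:   ", clone(p))   # independent pair: 0.25 for each outcome
```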

But they also differ in many ways. Some examples have been given above; here are more:

- Most GPTs do not have a notion of "orthogonal projection".
- There is in general no correspondence between states and measurement results (i.e. states and effects are different sets of vectors).
- "Energy" is typically a superoperator rather than an observable.
- Typically, there are pure states that are not connected by reversible time evolution.
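The remark about energy can be illustrated in QT, where both descriptions exist. The Hamiltonian H is an observable, but the same dynamics can also be written as a superoperator, L = -i(H ⊗ I - I ⊗ H^T), acting on the row-major vectorized density matrix; in a generic GPT, only a generator of this second kind, acting on state vectors, is available. The sketch below uses a hypothetical qubit Hamiltonian and checks that both descriptions give the same evolved state.

```python
import numpy as np

# Hypothetical qubit Hamiltonian and initial state |+><+|
H = np.array([[0.5, 1.0], [1.0, -0.5]], dtype=complex)
plus = np.array([1, 1], dtype=complex) / np.sqrt(2)
rho = np.outer(plus, plus.conj())
I = np.eye(2)

# Superoperator generator on row-major vectorized density matrices:
# d/dt vec(rho) = -i K vec(rho), with K = H (x) I - I (x) H^T (K is Hermitian)
K = np.kron(H, I) - np.kron(I, H.T)

def evolve_superop(t: float) -> np.ndarray:
    # exp(-i t K) via the eigendecomposition K = V diag(lam) V^dagger
    lam, V = np.linalg.eigh(K)
    return V @ np.diag(np.exp(-1j * t * lam)) @ V.conj().T

def evolve_unitary(t: float) -> np.ndarray:
    # Standard picture: rho(t) = U rho U^dagger with U = exp(-i t H)
    e, W = np.linalg.eigh(H)
    U = W @ np.diag(np.exp(-1j * t * e)) @ W.conj().T
    return U @ rho @ U.conj().T

t = 0.7
rho_superop = (evolve_superop(t) @ rho.reshape(-1)).reshape(2, 2)
print(np.allclose(rho_superop, evolve_unitary(t)))
```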

Given this extremely general framework of theories, one can then go ahead and explore the logical architecture of QT (and our world): if a GPT has property A, does it also have to have property B? Which kind of GPTs fit into spacetime physics or thermodynamics as we know it? Could some GPTs even describe physics in some hitherto unknown regime?

A research program in its own right is to write down a set of simple principles that "picks out" QT from the set of all GPTs. This is the program of reconstructing QT, to which our group has made substantial contributions. Here, we describe some of our contributions that are not directly related to these reconstructions.

If nature were maximally nonlocal, then it would not admit any reversible dynamics

While QT violates Bell inequalities, it does not do so in the maximal way: there are conceivable states (for example "Popescu-Rohrlich boxes") that do not admit superluminal signalling, but that would violate (if they existed) Bell inequalities by more than any quantum state.
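The numbers behind this statement are easy to reproduce. The sketch below (hypothetical function names) encodes the PR box as the conditional distribution P(a, b | x, y) = 1/2 whenever a ⊕ b = xy, verifies that Alice's marginal does not depend on Bob's input, and compares its CHSH value with the maximum over local deterministic strategies and with Tsirelson's bound 2√2 for QT.

```python
import itertools
import math

def pr_box(a, b, x, y):
    """Popescu-Rohrlich box: a XOR b = x AND y, each allowed output pair with probability 1/2."""
    return 0.5 if (a ^ b) == (x & y) else 0.0

def chsh(P):
    """CHSH value E00 + E01 + E10 - E11, with correlators E_xy = P(a = b) - P(a != b)."""
    def E(x, y):
        return sum((1 if a == b else -1) * P(a, b, x, y) for a in (0, 1) for b in (0, 1))
    return E(0, 0) + E(0, 1) + E(1, 0) - E(1, 1)

def alice_marginal(P, a, x, y):
    return sum(P(a, b, x, y) for b in (0, 1))

# No-signalling, checked for Alice (Bob's case is symmetric): her marginal ignores y
no_signalling = all(
    alice_marginal(pr_box, a, x, 0) == alice_marginal(pr_box, a, x, 1)
    for a in (0, 1) for x in (0, 1)
)

# Local deterministic strategies: a = f[x], b = g[y] for all 16 choices of f and g
def deterministic(f, g):
    return lambda a, b, x, y: float(a == f[x] and b == g[y])

local_max = max(chsh(deterministic(f, g))
                for f in itertools.product((0, 1), repeat=2)
                for g in itertools.product((0, 1), repeat=2))

print("PR box CHSH:", chsh(pr_box), "no-signalling:", no_signalling)
print("local bound:", local_max, "Tsirelson bound:", 2 * math.sqrt(2))
```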

In Ref. [], we have studied the most general GPT that admits such states: boxworld. We have shown that boxworld has an important deficiency: it admits only trivial dynamics. That is, all reversible transformations (analogues of QT's unitaries) would act only on single systems or permute subsystems, but would never correlate any systems. No reversible interaction would be possible. In particular, reversible time evolution could never create entangled states, and PR boxes would be "scarce resources" that would soon become depleted.
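A single boxworld system with two binary measurements is the gbit, whose state space is a square. Its reversible transformations are the symmetries of the square, a finite group, so a single gbit admits no nontrivial continuous reversible time evolution. The sketch below (our own illustration) finds these symmetries by brute force, assuming that it suffices to search over 2×2 matrices with entries in {-1, 0, 1} acting on (x, y). The helper `boxworld_reversible_group_order` is hypothetical: it counts the transformations that remain if, as described above, every reversible transformation of N gbits is a product of single-gbit symmetries and a permutation of the gbits.

```python
import itertools
import math
import numpy as np

# Pure states of a single gbit: the four vertices (x, y) of the square [-1, 1]^2
vertices = {(1, 1), (1, -1), (-1, 1), (-1, -1)}

def maps_square_to_itself(M: np.ndarray) -> bool:
    # The vertex set must be mapped bijectively onto itself
    images = {tuple(int(v) for v in M @ np.array(p)) for p in vertices}
    return images == vertices

# Assumption: candidates with entries in {-1, 0, 1} contain all symmetries of the square
symmetries = [
    M for M in (np.array(e).reshape(2, 2) for e in itertools.product((-1, 0, 1), repeat=4))
    if maps_square_to_itself(M)
]
print(len(symmetries))  # the dihedral group of the square

def boxworld_reversible_group_order(n_gbits: int) -> int:
    # Hypothetical count: local symmetries on each gbit combined with a permutation of the gbits
    return len(symmetries) ** n_gbits * math.factorial(n_gbits)

print([boxworld_reversible_group_order(n) for n in (1, 2, 3)])
```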

This suggests a kind of "trade-off" for QT: quantum theory admits the violation of Bell inequalities, but only up to an amount that still allows a rich set of reversible dynamics.

The black hole Page curve beyond Quantum Theory [x]

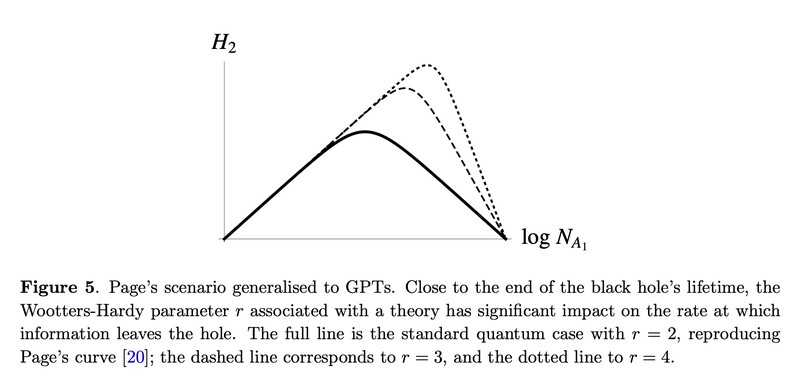

The Page curve appears in the context of the black hole information problem: a black hole forms from matter in a pure quantum state. As it emits (Hawking) radiation, entanglement between the hole and the emitted photons increases the radiation's entropy. However, due to unitarity, this entropy must start to decrease again at around half of the black hole's lifetime (as shown by Page under some assumptions), reaching zero once the black hole has vanished. Semiclassical calculations, in contrast, predict a linear growth of entropy. It is therefore interesting to ask what causes this discrepancy.
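Page's argument can be reproduced in a toy model, which is our own illustration and not the generalization beyond QT discussed in the text: model black hole plus radiation as N qubits in a Haar-random pure state, and treat the first k qubits as the radiation. The radiation's entanglement entropy then rises, peaks around k = N/2, and falls back to zero, closely following Page's estimate ln m - m/(2n) for subsystem dimensions m ≤ n. The qubit count, the seed, and the helper names are arbitrary choices.

```python
import numpy as np

def radiation_entropy(psi: np.ndarray, k: int, n_qubits: int) -> float:
    """Entanglement entropy (in nats) of the first k qubits of a pure n-qubit state."""
    M = psi.reshape(2 ** k, 2 ** (n_qubits - k))
    p = np.linalg.svd(M, compute_uv=False) ** 2   # Schmidt coefficients
    p = p[p > 1e-15]
    return float(-np.sum(p * np.log(p)))

def page_estimate(k: int, n_qubits: int) -> float:
    """Page's approximation ln m - m/(2n), where m <= n are the two subsystem dimensions."""
    m, n = sorted((2 ** k, 2 ** (n_qubits - k)))
    return float(np.log(m) - m / (2 * n))

N = 10
rng = np.random.default_rng(seed=0)
psi = rng.normal(size=2 ** N) + 1j * rng.normal(size=2 ** N)
psi /= np.linalg.norm(psi)  # a normalized complex Gaussian vector is Haar-random

for k in range(N + 1):  # k = number of qubits already emitted as radiation
    print(k, round(radiation_entropy(psi, k, N), 3), round(page_estimate(k, N), 3))
```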

In Ref. [], we have generalized Page's calculation beyond QT.

TO BE CONTINUED SOON.

Müller Group

Markus Müller

Group Leader

+43 (1) 51581-9530

Manuel Mekonnen

PhD Student